HDR Projectors Explained: Everything you want to know about HDR10, Dolby Vision, HDR10+ and HLG

It seems that the home theater industry loves a good format war. Betamax versus VHS, Blu-ray versus HD DVD, and now we're in the middle of another battle. HDR is the new thing in movies and TV, and it brings not just one but, depending on how you count them, somewhere between three and five competing standards.

So today we're going to break down HDR: what it is, what those standards mean, and what to know before you buy your next home theater projector.

What does HDR stand for?

HDR stands for high dynamic range.

What does HDR do?

HDR increases the range between the brightest whites and the darkest blacks on a projector or other media display. With HDR, video creators gain more control over how bright and colorful certain parts of an image can appear. This makes it possible for a home cinema viewer to more accurately see what the media creator saw in real life.

What is HDR?

With 4K now standard for new projectors and TVs, HDR is the new visual technology that manufacturers are pushing. Essentially, HDR lets your video display show a more vivid, lifelike image. If you want the best-looking movies and TV, you need to get a UHD projector that can handle HDR.

However, HDR itself isn't just one thing. All of the different types of HDR out there are based on four main concepts: luminance, dynamic range, color space, and bit depth.

Luminance

Luminance is a measure of how much light something emits, and it is measured in nits.

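A nit is one candela per square meter, and one candela is roughly the light emitted by a single candle. You'll usually see the brightness of a projector discussed in lumens, but for simplicity's sake we'll be using nits here, as is common with TVs. The two units aren't directly interchangeable: lumens describe a projector's total light output, while nits describe how bright the image on the screen appears, which also depends on screen size and screen gain. For reference, the often-quoted figure of 3.426 is the number of nits in one foot-lambert, the unit traditionally used for cinema screen brightness.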

Back in the day, CRT TVs were around 100 nits, and incidentally 100 nits is also what standard dynamic range video was calibrated to. However, on a capable TV or projector, HDR content can be much, much brighter. Manufacturers usually refer to this as the peak brightness.

With HDR video content, things like reflections, headlights in the dark, laser blasts and whatnot should all look extra bright. So HDR projectors can get way brighter than standard dynamic range displays, especially when playing HDR content. How exactly that brightness is used brings us to the second part of high dynamic range: dynamic range.

Dynamic Range

Dynamic range is basically contrast, or the difference between the brightest and darkest parts of a scene. It is typically measured in stops, a term brought over from cameras. Each stop is twice as bright as the one before it. These days, a lot of camera companies brag about new cameras being able to deliver 12 to 15 stops.

It may surprise you to learn that standard dynamic range content, which is still the vast majority of media out there, tops out at about six stops.

Manufacturers frequently report dynamic range as a contrast ratio instead: 5,000:1, 10,000:1, etc. This ratio is how much brighter the 'white' a projector can display is than its 'black'.

More stops or a bigger ratio means you can have more contrast and more subtle detail, especially in the shadows and highlights.
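Since each stop is a doubling, stops and contrast ratios are two ways of writing the same number. The short sketch below converts between them; the function names are made up for illustration, and real-world contrast figures depend heavily on how a manufacturer measures them.

```python
import math

def stops_to_ratio(stops: float) -> float:
    """Each stop doubles the brightness, so N stops is a 2^N : 1 ratio."""
    return 2.0 ** stops

def ratio_to_stops(ratio: float) -> float:
    """Inverse of the above: how many doublings fit into a contrast ratio."""
    return math.log2(ratio)

# Stop counts quoted in this section
for stops in (6, 10, 13):
    print(f"{stops} stops is about {stops_to_ratio(stops):,.0f}:1")

# Contrast ratios as they might appear on a spec sheet
for ratio in (5_000, 10_000):
    print(f"{ratio:,}:1 is about {ratio_to_stops(ratio):.1f} stops")
```

Read this way, the roughly six stops of SDR content work out to only about 64:1, which is why a 5,000:1 or 10,000:1 display has so much unused headroom with SDR material.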

Dynamic range is partly dependent on the projector's contrast ratio, but at least one HDR standard insists that a TV has to be capable of 13 stops of peak dynamic range to be considered HDR ready. To be honest, though, most are closer to 10 stops.

Color Space or Color Gamut

The color space is the range of colors that a video projector can produce. To simplify a bit, color gamut defines the maximum saturation of the primary colors (red, green, and blue) that the device can display.

Most SDR video and digital content (including .jpgs and most media on the web) uses a color space called Rec. 709 or sRGB. The big HDR video standards all support a wider gamut, up to one called Rec. 2020 or BT.2020. However, a lot of the content actually uses a slightly less wide standard called DCI-P3.

These recs are standards developed by the ITU, or International Telecommunication Union, which is actually a branch of the United Nations. There are more than a dozen recs, also sometimes prefixed with ITU or BT, which are recommendations that manufacturers try to follow to make sure content renders properly on their screens. They are just recommendations, though, and there are still displays out there that can't even show 100% of the colors in Rec. 709. If you're going to get a 4K projector, make sure it covers at least 100% of Rec. 709.

The difference between a normal and wide color gamut is kind of equivalent to luminance, but for color. Instead of brighter highlights, for example, an HDR projector should be able to project a color that has a more pure hue and higher saturation. So your greens are more 'green', your blues are more 'blue', and your reds are more 'red.'

Bit Depth

The last feature of HDR is bit depth. This is the amount of data used to describe brightness and color. With the exception of some professional video, SDR content was all 8-bit. The easiest way to think about this is with RGB color, where each pixel gets a value for red, green, and blue. In an 8-bit signal you have 256 possible values for each color, which is two to the power of eight, and that's why it's called 8-bit.

So 0,0,0 would be black, 255,255,255 would be white, 0,0,255 would be pure blue, et cetera.

HDR uses 10 bits of data for each channel, expanding the possible range of values out to 2^10, or 0 to 1023. So you're looking at four times as many possible values for each color channel of each pixel on your screen. The big advantage here is more subtle gradients with less banding, plus all the data needed for those super bright highlights.
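The arithmetic behind those numbers is simple enough to check directly. This is only an illustrative sketch with made-up function names; it counts the possible values per channel and the total number of red, green, and blue combinations at each bit depth.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values one color channel can take."""
    return 2 ** bits

def total_colors(bits: int) -> int:
    """Every combination of red, green, and blue values."""
    return levels_per_channel(bits) ** 3

for bits in (8, 10):
    levels = levels_per_channel(bits)
    print(f"{bits}-bit: values 0 to {levels - 1} per channel, "
          f"{total_colors(bits):,} possible colors")

# Going from 8-bit to 10-bit gives four times as many steps per channel
print(levels_per_channel(10) // levels_per_channel(8))
```

Those extra steps per channel are what allow smoother gradients, since neighboring shades sit closer together.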

The Different HDR Standards

As we mentioned, there are currently five HDR standards. They are: HDR10, HDR10+, Dolby Vision, HLG and Technicolor Advanced HDR.

Technicolor Advanced HDR is an HDR format which aims to be backwards compatible with SDR. There is very little content in this format, so we'll focus on the other four.

HDR10, HDR10+ and Dolby Vision

HLG, or hybrid log gamma, is kind of its own thing, but HDR10, HDR10+ and Dolby Vision are all based on something called PQ, or the perceptual quantizer. To explain this, we are going to have to talk about the somewhat technically complicated gamma curve. When a digital camera records an image, photons hit the sensor and get turned into voltage, and the camera records that voltage. Twice the voltage and the pixel will be twice as bright.

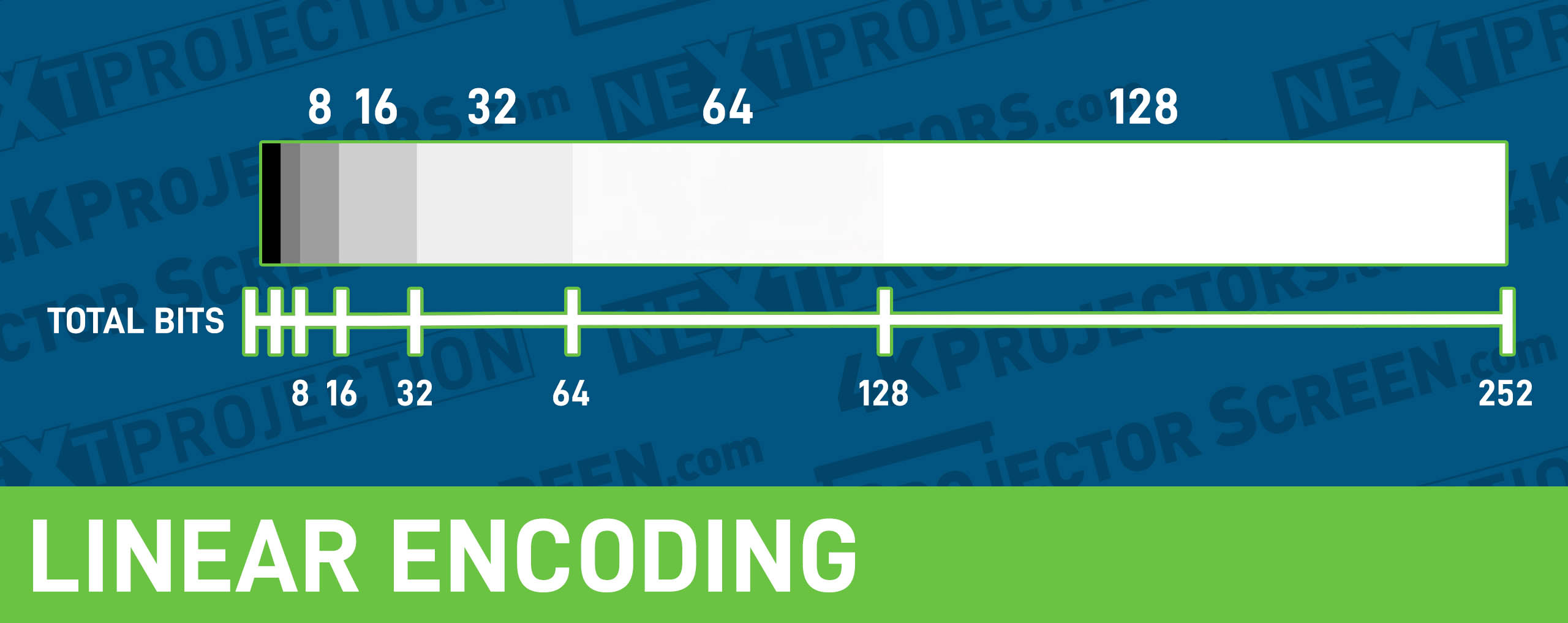

This is called a linear response and it's how digital images are stored.

If we imagine an 8-bit image, let's say a value of 4 is the darkest signal the camera can register. Twice as bright as that is 8, double that is 16, then 32, 64, and 128. That doubles again to 256, which is essentially all the data we can record (8-bit values stop at 255), and that's our white point.

But there's a problem here.

At the very bottom of the scale, we only have four values to show the gradient between black and the darkest gray, while we've got 128 values to show the difference between white and nearly white in the highlights. Linear encoding spends most of its data on the lightest few shades in an image and almost none on the shadows. Unfortunately, this is exactly the opposite of how our eyes work. We are extremely sensitive to changes at the lower end of our vision, but above a certain brightness point, we barely perceive any difference.

A gamma curve, at its simplest, is an equation that bends that linear response and tries to bring it closer to how we actually see. It takes data away from the bright end of the spectrum and moves it into the darker end of the spectrum, where we actually see the most detail.
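To make that shift concrete, the sketch below counts how many 8-bit values land in each of the top six stops under linear encoding and under a simple power-law gamma curve. The exponent of 2.2 is an assumption chosen for illustration (real SDR transfer functions such as sRGB and Rec. 709 are slightly more involved), and the function names are made up.

```python
GAMMA = 2.2  # assumed exponent, for illustration only

def linear_encode(luminance: float) -> float:
    """Store relative luminance (0.0 to 1.0) unchanged."""
    return luminance

def gamma_encode(luminance: float) -> float:
    """Bend the response so dark tones receive more of the signal range."""
    return luminance ** (1 / GAMMA)

def codes_per_stop(encode, stops: int = 6, bits: int = 8) -> list:
    """Count code values in each stop, starting with the brightest one."""
    max_code = 2 ** bits - 1
    counts = []
    for s in range(stops):
        upper = 2.0 ** -s        # top of this stop
        lower = 2.0 ** -(s + 1)  # half as bright
        counts.append(round(encode(upper) * max_code)
                      - round(encode(lower) * max_code))
    return counts

print("linear:", codes_per_stop(linear_encode))
print("gamma: ", codes_per_stop(gamma_encode))
```

With linear encoding the count halves at every stop on the way down, while the gamma curve hands several times more values to the darkest stops at the expense of the brightest one.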

Cameras these days frequently use a curve like this when you take an image, so they can spend more data on capturing shadows. Your computer then uses the opposite curve to make sure the image displays correctly. The perceptual quantizer is like a gamma curve on steroids.

It was designed primarily by Dolby, and it's based on how people actually perceive contrast at various luminance levels. It's supposed to be a closer analog to how your eyes actually work, and it comes with way more complicated math. At 10 bits, PQ uses twice the data of all of SDR, over 500 code values, to describe just 0 to 100 nits of brightness. But then it keeps going, with headroom built in to extend all the way to 10,000 nits of brightness.
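For the curious, here is a minimal sketch of the PQ math using the constants published in SMPTE ST 2084. It assumes a full-range 10-bit signal (codes 0 to 1023); real video is often carried in a narrower "limited" range, so the codes in an actual stream will differ somewhat. The function names are illustrative.

```python
# Constants from SMPTE ST 2084 (the PQ specification)
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10_000  # the brightness that the top of the PQ signal represents

def pq_encode(nits: float) -> float:
    """Absolute luminance in nits -> PQ signal between 0.0 and 1.0."""
    y = max(nits, 0.0) / PEAK_NITS
    y_pow = y ** M1
    return ((C1 + C2 * y_pow) / (1 + C3 * y_pow)) ** M2

def pq_decode(signal: float) -> float:
    """PQ signal between 0.0 and 1.0 -> absolute luminance in nits."""
    e_pow = signal ** (1 / M2)
    y = (max(e_pow - C1, 0.0) / (C2 - C3 * e_pow)) ** (1 / M1)
    return y * PEAK_NITS

# Assumed full-range 10-bit quantization
for nits in (100, 1_000, 4_000, 10_000):
    code = round(pq_encode(nits) * 1023)
    print(f"{nits:>6} nits -> 10-bit code {code}")
```

Under these assumptions, 100 nits lands at roughly the halfway point of the 10-bit range, in line with the "over 500" figure above, and everything beyond that is reserved for highlights.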

So what are the differences between these three standards based on PQ?

HDR10 is an open standard, which has made it the most popular form of HDR.

If a TV or projector says it supports HDR, you can bet it at least means HDR10. The downside is that it's not rigidly defined. It's more a collection of suggested technologies than a true standard, so the quality of HDR10 devices and content can vary widely. Still, at a minimum HDR10 should include 10-bit video and a wide color gamut, and it generally targets a peak brightness of 1,000 nits.

Dolby Vision, by contrast, is completely controlled by Dolby. It supports up to 12-bit video, although even if a TV can accept a 12-bit signal, a lot of content and the actual displays are still only 10-bit. It's usually mastered to a peak brightness of 4,000 nits, so Dolby Vision is potentially brighter than HDR10. Beyond that, if a company wants to support Dolby Vision on its device, it has to pay a license fee, but in return Dolby will work with the company to make sure its projectors, TVs, or monitors properly and accurately render and play back Dolby Vision content.

This does lead to one of the quirks of PQ. Gamma curves were relative. They modified the brightness of an image, but they had no innate values attached to them. PQ is absolute: every value between 0 and 1023 maps to a specific brightness, no matter the display. So an input value of 519 should always display a brightness of 100 nits, no matter where you're showing it.

But this leads to a big problem. Remember, Dolby Vision is mastered to 4,000 nits, and no consumer TV or projector can come close to that. Heck, plenty of them can barely reach the 1,000 nits of HDR10. So how do you solve this? Metadata! HDR10 video carries metadata that describes the average scene brightness and the maximum pixel brightness in whatever movie or show you're watching. TV and projector manufacturers then include algorithms that try to map that maximum brightness onto the brightest shade your display can actually produce.

It's essentially a gamma curve on top of the PQ curve. The downside is this: imagine a generally dark show that has one really bright flash in one scene. The TV has to account for that single bright light, and it may shift the rest of the content darker to compensate. To try to fix this, Dolby Vision adds dynamic metadata, which changes scene by scene or even shot by shot instead of being set for the entire program.
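A toy example shows why per-scene metadata helps. The tone mapper below is deliberately naive (a plain linear scale) and entirely hypothetical, as are the 600-nit display and the two scenes; real TVs and projectors use proprietary, far more sophisticated curves.

```python
def tone_map(pixel_nits: float, content_peak_nits: float,
             display_peak_nits: float) -> float:
    """Hypothetical, naive tone mapper: scale everything down linearly
    whenever the content's stated peak exceeds what the display can show."""
    if content_peak_nits <= display_peak_nits:
        return pixel_nits  # content already fits, leave it alone
    return pixel_nits * display_peak_nits / content_peak_nits

DISPLAY_PEAK_NITS = 600  # assumed display capability

# Two made-up scenes, each containing the same 50-nit mid-tone
scenes = [
    {"name": "dim interior", "peak_nits": 200, "pixel_nits": 50},
    {"name": "explosion", "peak_nits": 4000, "pixel_nits": 50},
]

# Static metadata: one peak value covers the entire program
program_peak = max(scene["peak_nits"] for scene in scenes)

for scene in scenes:
    static = tone_map(scene["pixel_nits"], program_peak, DISPLAY_PEAK_NITS)
    dynamic = tone_map(scene["pixel_nits"], scene["peak_nits"],
                       DISPLAY_PEAK_NITS)
    print(f"{scene['name']}: static {static} nits, dynamic {dynamic} nits")
```

With the single program-wide peak, the dim interior is darkened just because an explosion happens somewhere else in the film. With per-scene values, the dim scene passes through untouched and only the explosion is compressed.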

Similarly, HDR10+ is an attempt to add dynamic metadata to the HDR10 standard. Because PQ is absolute, the idea is that content in one of these three standards, and especially Dolby Vision, should look the same in your living room as it did to the show's creators when they were editing it. This is great in theory, but one downside is that it doesn't take into account how bright the room around your projector or TV is.

And by default, Dolby Vision is intended to be watched in a nearly dark room. Originally it seemed like Dolby wanted the PQ curve to actually override your display's brightness settings when playing back Dolby Vision video, so it perfectly matched the director's vision, but it doesn't look like any TV manufacturers decided to actually do that.

The downside of each of these standards is that the content generally looks washed out and gray on older non-HDR projectors and TVs. Streaming services can usually identify what your device supports and serve up an SDR version as needed, but that's not possible in broadcast. Enter HLG.

HLG

HLG is our last HDR standard. It was cooked up by the UK’s BBC and Japan's NHK television networks to deliver HDR content via broadcast. HLG supports a wide color gamut and 10-bit video, but otherwise it's pretty different from the other standards.

HLG stands for hybrid log gamma, and that's essentially what it is. HLG takes the standard gamma curve that's been used in broadcast for nearly a century, but towards the brighter end, it smoothly transitions into a logarithmic curve that compresses the highlights and allows for much greater dynamic range. The idea here is that older TVs will just interpret the part of the picture that follows the standard gamma curve. The highlights that would clip to white in normal standard dynamic range content will look the same on those sets, but TVs and projectors that support HLG will get more detail in the brightest parts of the image. The end result isn't always perfect on standard TVs. An HLG image can come through a little muted compared to true SDR content, but it's an easy way for broadcasters to start the transition to HDR broadcasts.
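The "hybrid" part is easy to see in the formula itself. Below is a minimal sketch of the HLG encoding curve using the constants published in ITU-R BT.2100. It covers only the camera-side curve for a single normalized value; the function name is illustrative, and a complete HLG pipeline involves additional steps not shown here.

```python
import math

# Constants from ITU-R BT.2100 for the HLG curve
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)

def hlg_oetf(scene_light: float) -> float:
    """Normalized scene light (0.0 to 1.0) -> HLG signal (0.0 to 1.0)."""
    if scene_light <= 1 / 12:
        # Lower part: a square-root curve, similar to a conventional gamma
        return math.sqrt(3 * scene_light)
    # Upper part: a logarithmic curve that compresses the highlights
    return A * math.log(12 * scene_light - B) + C

for light in (0.0, 1 / 12, 0.25, 0.5, 1.0):
    print(f"scene light {light:.4f} -> signal {hlg_oetf(light):.4f}")
```

The two pieces meet at a signal level of 0.5, so the bottom half of the signal behaves like a familiar gamma curve, and the top half is where the extra highlight range is packed in.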

Summing It All Up

So there you have it, the various standards and how they work. There are a few others floating around out there, but for now HDR10, HDR10+, Dolby Vision, and HLG are the ones to watch.

If you buy an HDR-ready projector, it almost certainly supports HDR10 and probably HLG, but Dolby Vision and HDR10+ are more of an either-or situation, at least for now. In terms of content providers, Amazon says most of its HDR10 content is also available in HDR10+, and it has some shows in Dolby Vision. Netflix has pretty much skipped HDR10+, but it has a lot of Dolby Vision and HDR10 on offer. Blu-ray is a mix, with some studios just opting to include every format on the same disc, so check before you buy. And sadly, Hulu has no HDR content for the moment.

If you want to buy an HDR projector, now is the best time to do it. Shop our great selection of projectors or give us a call at (888) 392-4814.